From a science standpoint, there's nothing incredibly complicated about a microwave oven. A thing called a magnetron generates high-power radio waves (the frequency used is about 2.45 GHz, corresponding to a wavelength of roughly 12 centimeters), which are then directed by a waveguide into a metal box whose dimensions are chosen to more or less support a stable standing wave. Contrary to popular belief, that frequency isn't tuned to some special resonance of the water molecule. Instead, because water molecules are electric dipoles, they keep trying to rotate to line up with the rapidly oscillating field, and all that jostling turns the microwave energy into heat. Ideally the water molecules in question are part of some kind of food you're attempting to heat up because you're too lazy to cook real dinner, so in principle this is a quick, efficient way of heating stuff that contains water (and, in principle, heating it fairly evenly, because the microwaves penetrate into your TV dinner instead of only warming the surface).
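As a quick sanity check on those numbers, the wavelength is just the speed of light divided by the frequency. The sketch below assumes the common 2.45 GHz oven frequency. The second figure, half a wavelength, is roughly the spacing between neighboring hot spots along one direction of an idealized standing wave; a real oven cavity has a messier three-dimensional pattern.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def wavelength_m(frequency_hz: float) -> float:
    """Free-space wavelength for a given frequency: lambda = c / f."""
    return SPEED_OF_LIGHT_M_PER_S / frequency_hz


if __name__ == "__main__":
    lam = wavelength_m(2.45e9)  # typical household oven frequency (assumed)
    print(f"wavelength: {lam * 100:.1f} cm")            # wavelength: 12.2 cm
    # Neighboring antinodes of an ideal 1-D standing wave are half a wavelength apart.
    print(f"hot-spot spacing: {lam * 100 / 2:.1f} cm")  # hot-spot spacing: 6.1 cm
```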

Most microwaves don't have much in the way of controls; the main thing you can set is the cook time, although there's usually a seldom-used option to vary the microwave power too. Almost all of them, though, also have a mysterious setting called "Defrost." Based on extensive observational research (I stood in front of the microwave for three whole minutes while my soup defrosted the other night), I've been able to determine that:

1) The oven was switching the microwave power on and off, or at least varying it, in cycles that got faster the longer it ran (you can hear the oven's hum change slightly whenever the magnetron switches on)

2) My soup-iceberg melted a lot more evenly than it generally does when I don't use defrost mode

Irritatingly, we actually discussed why this happens in some detail in my undergrad electromagnetics class. I hate E&M with the passion of one thousand suns though, so I either wasn't paying attention the first time or just erased that factoid in the process of purging that whole semester from memory. Either way, I had to go look it up and then felt stupid when I did.

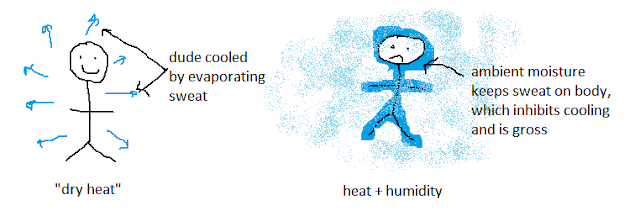

Way back when I wrote about windchill, I talked about heat always wanting to diffuse from warmer areas into cooler ones. Liquid water works the same way: if there's a blob of hot water in a body of much cooler water, the heat will spread out until the whole volume is the same temperature (this usually happens pretty quickly but, as anyone who's ever swum through a mysterious warm region in their community pool knows, not instantaneously). And because liquid water can flow, it doesn't have to rely on conduction alone; the warm water itself moves around and mixes, carrying its heat with it. The point here is that liquid water is really good at spreading heat around.
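Here's a minimal sketch of that evening-out process: a one-dimensional row of cells with one hot spot, updated with the standard explicit finite-difference scheme for the heat equation. It models pure conduction only (no flowing currents), and the value of `r` (diffusivity times the time step, divided by the cell spacing squared) is an arbitrary illustrative choice. This scheme only stays stable for `r` up to 0.5.

```python
def diffuse(temps: list[float], r: float, steps: int) -> list[float]:
    """Explicit finite-difference heat diffusion on a 1-D row of cells.

    r = diffusivity * dt / dx**2 (dimensionless); must be <= 0.5 for stability.
    The ends are insulated: no heat flows in or out of the row.
    """
    if not 0 < r <= 0.5:
        raise ValueError("r must be in (0, 0.5] for the explicit scheme to be stable")
    t = list(temps)
    n = len(t)
    for _ in range(steps):
        new = t[:]
        for i in range(n):
            left = t[i - 1] if i > 0 else t[i]       # mirror the edge cell: zero flux
            right = t[i + 1] if i < n - 1 else t[i]
            new[i] = t[i] + r * (left - 2 * t[i] + right)
        t = new
    return t


if __name__ == "__main__":
    row = [20.0] * 11
    row[5] = 80.0  # one warm blob in the middle of a cool pool
    for steps in (0, 10, 100, 1000):
        print(steps, [round(x, 1) for x in diffuse(row, r=0.25, steps=steps)])
```

The peak flattens out step by step while the total amount of heat in the row stays the same, ending with every cell at the same average temperature.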

So to tie that back to our microwave, my frozen soup is almost entirely ice. Obviously ice and water are chemically identical, but in ice the molecules are locked in place in a crystal, whereas in liquid water they're free to tumble around and go wherever. That matters in two ways. First, a molecule that's locked in place can't keep twisting back and forth to follow the microwave field, so ice absorbs far less microwave energy than liquid water does; this is the main reason ice heats up so much more slowly than water in a microwave. Second, whatever heat the ice does absorb can't be carried around by flowing liquid, so it only spreads by conduction, which is much slower than the mixing you get in liquid water.
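The standard textbook expression for the time-averaged microwave power absorbed per unit volume of a material makes the ice-versus-water difference concrete:

$$
P = 2\pi f \,\varepsilon_0\, \varepsilon'' \, E_{\mathrm{rms}}^2
$$

Here $f$ is the oven frequency, $\varepsilon_0$ is the permittivity of free space, $E_{\mathrm{rms}}$ is the local (RMS) electric field strength, and $\varepsilon''$ is the material's dielectric loss factor, which measures how much energy its molecules soak up by trying to follow the field. At microwave frequencies, ice's loss factor is orders of magnitude smaller than liquid water's, which is why a frozen block barely notices the microwaves at first. The $E_{\mathrm{rms}}^2$ term also means that a spot where the field is only 20% weaker absorbs about 36% less power.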

That's a problem if you're trying to melt ice evenly with microwaves. Because the microwave power is slightly (or sometimes drastically) different at different points in the box, some of your ice is going to heat up much more quickly than the rest of it, and that heat is going to stay pretty much where it is. The problem gets even worse once you actually manage to melt some of the ice: now you've got a blob of liquid water which, as we discussed, heats up much faster than ice. So while you're trying to melt the rest of the ice, that water keeps getting hotter and hotter, and it also melts the ice next to it, creating an expanding pocket of "runaway heating." The end result is that, when all the ice has finally melted, some regions of your food have been cooking for several minutes while others only thawed a few seconds ago. Since it's all liquid now the temperature will even out pretty fast, but there's going to be a huge variation in how much "bonus cooking" different bits of the soup received. Nobody wants that.
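A toy model shows how quickly this gets out of hand. The sketch below treats the soup as a handful of separate chunks that exchange no heat at all, each sitting in a slightly different field strength. Purely for illustration, it assumes a chunk absorbs microwaves like ice until it has completely melted and like liquid water afterwards. The absorption rates `P_ICE` and `P_WATER` are made-up round numbers, not measured values. The specific heats and latent heat are standard textbook figures, and the soup is treated as pure water starting at -18 °C.

```python
C_ICE = 2100.0          # J/(kg*K), specific heat of ice (approximate)
C_WATER = 4186.0        # J/(kg*K), specific heat of liquid water
LATENT = 334_000.0      # J/kg, latent heat of fusion of ice
START_C = -18.0         # typical freezer temperature
P_ICE = 300.0           # W/kg absorbed by ice at full field (hypothetical)
P_WATER = 1500.0        # W/kg absorbed by liquid water at full field (hypothetical)

E_MELT = C_ICE * (0.0 - START_C) + LATENT  # J/kg to go from START_C ice to 0 °C water


def final_temps(fields: list[float]) -> list[float]:
    """Temperature of each chunk at the moment the last chunk finishes melting.

    fields: relative field strength at each chunk (1.0 = strongest spot).
    Chunks are thermally isolated; temperatures are capped at 100 °C (boiling).
    """
    melt_times = [E_MELT / (P_ICE * f) for f in fields]
    t_end = max(melt_times)
    temps = []
    for f, t_melt in zip(fields, melt_times):
        heating_rate = P_WATER * f / C_WATER      # K/s once the chunk is liquid
        temps.append(min(100.0, (t_end - t_melt) * heating_rate))
    return temps


if __name__ == "__main__":
    fields = [1.0, 0.95, 0.9, 0.85, 0.8]  # only a 20% spread in field strength
    for f, temp in zip(fields, final_temps(fields)):
        print(f"field {f:.2f}: {temp:5.1f} °C")
```

With these assumed numbers, a mere 20% spread in field strength leaves the best-placed chunk at the (capped) boiling point while the worst-placed one has only just thawed. That's the "bonus cooking" problem in a nutshell.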

Defrost mode is an attempt to get around this problem by cycling the microwave power on and off. While the power is on, the ice absorbs heat unevenly, but during the off cycle that heat has a chance to spread out and reduce those temperature variations (heat does conduct through ice, just far more slowly than it gets carried around in liquid water). Initially the "off" cycles are much longer than the "on" cycles, but as the food warms up closer to melting you can get away with much longer "on" and much shorter "off" cycles without things getting too nonuniform.
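To see why pausing helps, here's a two-chunk version of the same kind of toy model, this time letting heat flow between the chunks in proportion to their temperature difference. It compares running at full power continuously with a fixed cycle of 10 seconds on and 20 seconds off. The coupling constant `K`, the absorption rates, and the cycle timing are all hypothetical and not taken from any real oven. The sketch also uses a fixed cycle rather than the changing on/off pattern described above.

```python
C_ICE = 2100.0       # J/(kg*K), specific heat of ice (approximate)
C_WATER = 4186.0     # J/(kg*K), specific heat of liquid water
LATENT = 334_000.0   # J/kg, latent heat of fusion
START_C = -18.0      # freezer temperature
P_ICE = 300.0        # W/kg absorbed while frozen, at full field (hypothetical)
P_WATER = 1500.0     # W/kg absorbed once liquid, at full field (hypothetical)
K = 20.0             # W/(kg*K) heat exchange between the two chunks (hypothetical)
FIELD = (1.0, 0.8)   # chunk 0 sits in a hot spot, chunk 1 in a weaker spot

E_WARM = C_ICE * (0.0 - START_C)  # J/kg to warm ice from START_C to 0 °C
E_MELT = E_WARM + LATENT          # J/kg to end up as 0 °C liquid


def temperature(energy: float) -> float:
    """Temperature (°C) of a chunk holding `energy` J/kg above START_C ice."""
    if energy < E_WARM:
        return START_C + energy / C_ICE
    if energy < E_MELT:
        return 0.0                     # stuck at the melting point while thawing
    return (energy - E_MELT) / C_WATER


def defrost(on_s: float, period_s: float, dt: float = 1.0) -> tuple[float, float]:
    """Run until both chunks are fully melted; return (seconds, hottest °C)."""
    energy = [0.0, 0.0]
    t = 0.0
    while min(energy) < E_MELT:
        temps = [temperature(e) for e in energy]
        flow = K * (temps[0] - temps[1])      # W/kg from chunk 0 into chunk 1
        if t % period_s < on_s:               # magnetron on for this step
            for i, f in enumerate(FIELD):
                rate = P_WATER if energy[i] >= E_MELT else P_ICE
                energy[i] += rate * f * dt
        energy[0] -= flow * dt
        energy[1] += flow * dt
        t += dt
    return t, max(temperature(e) for e in energy)


if __name__ == "__main__":
    print("continuous:", defrost(on_s=30, period_s=30))
    print("pulsed:    ", defrost(on_s=10, period_s=30))
```

With these made-up numbers, the pulsed run takes considerably longer but finishes with a noticeably cooler hot spot. In this model the pauses do nothing while both chunks sit at exactly 0 °C mid-thaw, because heat only flows when there's a temperature difference.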

It's far from a perfect solution, the main problem being that most microwaves calibrate the length of the on/off cycles by asking you to enter the weight of the food you want to defrost. So unless you have a scale in your kitchen specifically for weighing blocks of frozen food (do you? we don't), you're just going to end up guessing, and if you're off by much, the calibration will be wrong and the whole thing won't work nearly as well. Even if you're only in the ballpark with the weight, though, you're probably going to get a much more uniform defrost than you would by just running the microwave with the power on the whole time.
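The reason the weight matters is that the energy needed to thaw something scales directly with its mass. Here's a back-of-the-envelope sketch that treats the food as pure water frozen at -18 °C. The `absorbed_w` figure is a hypothetical average power actually soaked up by the food, not any particular oven's rating, and the sketch ignores the on/off cycling entirely.

```python
C_ICE = 2100.0       # J/(kg*K), specific heat of ice (approximate)
LATENT = 334_000.0   # J/kg, latent heat of fusion


def thaw_energy_j(mass_kg: float, start_c: float = -18.0) -> float:
    """Energy to take `mass_kg` of ice from start_c to 0 °C liquid water."""
    return mass_kg * (C_ICE * (0.0 - start_c) + LATENT)


def thaw_time_s(mass_kg: float, absorbed_w: float, start_c: float = -18.0) -> float:
    """Time to supply that energy at a constant absorbed power."""
    return thaw_energy_j(mass_kg, start_c) / absorbed_w


if __name__ == "__main__":
    actual, guessed = 0.5, 0.3   # kg: the real soup vs. a bad guess (hypothetical)
    power = 250.0                # W actually absorbed (hypothetical)
    print(f"needed: {thaw_time_s(actual, power) / 60:.1f} min")    # needed: 12.4 min
    print(f"planned: {thaw_time_s(guessed, power) / 60:.1f} min")  # planned: 7.4 min
```

In this model, guessing 40% low on the weight means planning 40% too little thawing time.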

You can extrapolate from all this the reason microwaves cook things so notoriously unevenly: most food is made up of several different ingredients, each containing a different amount of water and each with a different thermal conductivity. Since heating in a microwave scales roughly with the amount of water that's there to absorb microwave power, all your ingredients will heat at different rates even though they're (in principle) exposed to the same amount of microwave power. That's why even with modern microwaves, which have a little rotating plate at the bottom so that no part of your food sits in a dead spot the whole time, hardly anything ever tastes quite right after heating. As we've already established my basic hatred and ignorance when it comes to all things electromagnetic-y, I have no idea how to solve this problem, besides saying "use a real oven, lazy-ass."
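To put rough numbers on that, here's a sketch that takes the "proportional to water content" simplification at face value and divides by each ingredient's specific heat to get an initial warming rate. Every number in it, including the water fractions and specific heats, is made up for illustration and shouldn't be read as measured food data.

```python
P_PER_KG_WATER = 1500.0  # W absorbed per kg of water in the food (hypothetical)

# (water fraction, specific heat in J/(kg*K)); illustrative values only
INGREDIENTS = {
    "broth": (0.95, 4000.0),
    "rice": (0.65, 3000.0),
    "cheese": (0.40, 2500.0),
}


def warming_rate_k_per_s(water_fraction: float, specific_heat: float) -> float:
    """Initial temperature rise rate if absorbed power scales with water content."""
    return P_PER_KG_WATER * water_fraction / specific_heat


if __name__ == "__main__":
    for name, (water, c) in INGREDIENTS.items():
        rate = warming_rate_k_per_s(water, c)
        print(f"{name:>6}: {rate * 60:5.1f} °C per minute")
```

Even with these invented figures, the cheese warms at roughly two-thirds the rate of the broth while sitting in the exact same field, and that's before counting the differences in how well each ingredient spreads heat.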