- Blue

- Yellow

- Kind of reddish-pink

- White

Anyway, since all of those colors are coming from the same broadband light source (the sun, which you may have encountered at some point), I always just figured there was a lot of light filtering and absorption in the atmosphere that cut out certain colors and let others pass. The problem is that the atmosphere is always pretty much the same, but apparently it'll let totally different frequencies of light hit your eyes depending on the circumstances (blue in the daytime, red at sunset, which pretty much covers the whole visible spectrum). This is probably something I learned in elementary school, but apparently somewhere in two-odd decades of cramming my brain with largely useless facts about electricity, as well as any unguarded alcohol that happened to be lying around, that knowledge got lost. Luckily we have an internet now.

As I said above, I'm not a complete idiot (usually). I know that as far as we're concerned here on earth, the sun has a radiation spectrum similar to any other black body, with its peak output somewhere around the middle of the visible spectrum, meaning it contains various amounts of all visible wavelengths. In non-science words, that means it emits what's basically a whitish-yellow light. You can independently verify this by looking directly at the sun, which I recommend doing on a clear, bright day to maximize the effect.
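If you'd rather check the black body claim with arithmetic than with your retinas, here's a minimal sketch using Wien's displacement law. The function name is made up for illustration, and the 5772 K surface temperature is a standard reference figure I'm plugging in, not something from this post. Note this is the peak per unit wavelength; the peak per unit frequency lands somewhere else, which is one of those horrific details I'm ignoring.

```python
# Wien's displacement law: peak wavelength = b / T for an ideal black body.
WIEN_B_M_K = 2.897771955e-3   # Wien displacement constant, metre-kelvins
SUN_TEMP_K = 5772.0           # assumed effective surface temperature of the sun


def peak_wavelength_nm(temperature_k):
    """Wavelength (in nanometres) where a black body at this temperature peaks."""
    return WIEN_B_M_K / temperature_k * 1e9


if __name__ == "__main__":
    # Visible light runs from very roughly 400 nm (violet) to 700 nm (red).
    print(f"Solar peak: about {peak_wavelength_nm(SUN_TEMP_K):.0f} nm")
```

That lands in the green part of the spectrum, comfortably inside the visible range, which fits with the sun putting out a healthy amount of every visible color.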

So then why, during a clear day, does the part of the sky not currently being occupied by the sun look a whitish blue? My initial, typically wrong guess would have been "because the atmosphere is absorbing all the photons but the blue ones." The problem with that is that 1) we already know photons from most of the visible spectrum can get through the atmosphere just fine, from that looking-at-the-sun experiment we did earlier (which you probably did wrong if you can still read this, incidentally), and 2) higher-frequency light, like blue and UV, tends to be absorbed by things a lot more easily than lower-frequency light like green, red, and infrared (there are exceptions to this rule of thumb, but they're uncommon enough that various people have gotten rich discovering them). So if absorption were the culprit, we'd expect the sky to look kind of reddish-yellow during the day instead of blue, which it clearly does not.

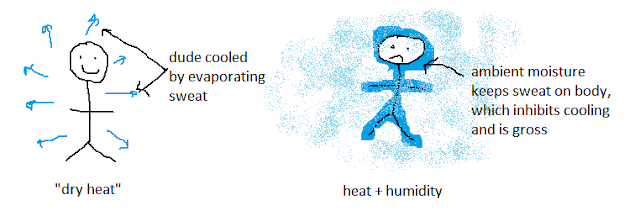

So what's the deal? It turns out I was close, kind of. Low-frequency (reddish to greenish) photons pass through our atmosphere pretty much unimpeded, meaning any that hit your eyes if you're looking up at the sky probably came on a more or less straight path from the sun. Higher-frequency (blue and UV) photons, on the other hand, are going to interact with the nitrogen, oxygen, and argon in the atmosphere quite a bit, but instead of being absorbed and turned into heat they'll get scattered.

Like most things in science, if you ignore the horrific mathematical and physical models that govern the specifics of it, scattering is conceptually very simple: photon hits atom, photon bounces off atom at some other angle, photon hits yet another atom, wash rinse repeat. So any blue photon that makes it to your eyes (or UV photon that makes it to the back of my neck) is going to have been rattling around in the atmosphere for a while, and as a result could be coming from pretty much anywhere in the sky. So when you look directly at the sun, you're seeing the photons that are low-energy enough to pass through the atmosphere without scattering, while if you look elsewhere in the sky you're seeing the ones that scattered all over the place when they hit, instead of going straight from the sun to your eye. Incidentally, this is why you can still get sunburned without direct sunlight; the UV rays that burn follow the same rules as blue light, and as a result can come from anywhere in the atmosphere.
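For a rough sense of how lopsided this is, here's a small sketch. It assumes the standard Rayleigh scattering result for particles much smaller than the wavelength, where scattering strength goes as one over wavelength to the fourth power. The function name and the specific wavelengths are picked for illustration, not taken from anywhere official.

```python
# Rayleigh scattering strength scales as 1 / wavelength**4
# (valid when the scatterers are much smaller than the wavelength).

def rayleigh_relative(wavelength_nm, reference_nm=700.0):
    """How strongly this wavelength scatters compared to the reference (red)."""
    return (reference_nm / wavelength_nm) ** 4


if __name__ == "__main__":
    # Illustrative wavelengths: near-UV, blue, green, red.
    for name, nm in [("UV", 350.0), ("blue", 450.0), ("green", 550.0), ("red", 700.0)]:
        print(f"{name:>5} ({nm:.0f} nm): {rayleigh_relative(nm):.1f}x as much scattering as red")
```

So blue gets bounced around several times more readily than red, and UV even more so, which is about what the back of my neck would have predicted.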

[Image caption: This guy's looking right at the sun, and look how happy he is! Come on, try it!]

So alright, that mystery's solved. What about sunset though? At sunset we see both the sun going from a yellow to reddish color and the sky going from a blue to red-pinkish color, suggesting that the spectrum of photons getting to us is changing dramatically and inspiring countless terrible poems over the centuries.

The answer is my least favorite thing in the whole world: trigonometry. When the sun is directly overhead, it has exactly one atmosphere between it and you. That means the light from the sun only has to get through one atmosphere to make it to your eyes. When the sun is setting near the horizon, on the other hand, the small angle between the sun and the horizon means the light comes in at a slant and has to go through the equivalent of a lot more than one atmosphere, as many as a few dozen when the sun is right at the horizon (see diagram). This extra atmospheric travel distance means that most of those scattering blue photons aren't going to make it to where you're standing; they'll end up hitting some other lucky person who's standing under where the sun is right now. So what's left is basically just the non-scattered reddish sunlight, which is the only thing illuminating the sky and therefore not washed out by scattered blues the way it would be during the day. This also explains why the sun itself looks redder; the atmospheric travel distance is so long that even some of the midrange (green) frequencies are going to get scattered away, meaning the only photons still making it directly from the sun to your eye are mostly red.

[Diagram caption: Distances somewhat exaggerated, both for emphasis and because I'm bad at MSPaint]
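Since trigonometry is involved anyway, here's a rough sketch of the sunset geometry. It treats the atmosphere as a uniform shell around a round earth, which is a simplification. The 8.5 km effective thickness and the overhead optical depth of 0.2 for blue light are ballpark assumptions of mine, and both function names are invented for this example.

```python
import math

EARTH_RADIUS_KM = 6371.0
ATMOSPHERE_KM = 8.5        # assumed effective thickness of a uniform atmosphere
TAU_BLUE_OVERHEAD = 0.2    # assumed scattering optical depth at 450 nm, sun overhead


def air_mass(zenith_deg):
    """Path length through the shell, in units of 'one atmosphere straight up'."""
    r = EARTH_RADIUS_KM / ATMOSPHERE_KM
    c = math.cos(math.radians(zenith_deg))
    return math.sqrt((r * c) ** 2 + 2 * r + 1) - r * c


def direct_fraction(wavelength_nm, zenith_deg):
    """Fraction of photons arriving straight from the sun without being scattered."""
    tau = TAU_BLUE_OVERHEAD * (450.0 / wavelength_nm) ** 4  # 1/wavelength^4 scaling
    return math.exp(-tau * air_mass(zenith_deg))


if __name__ == "__main__":
    # Zenith angle 0 is straight overhead, 90 is right at the horizon.
    for zenith in (0, 60, 85, 90):
        print(f"zenith {zenith:2d} deg: {air_mass(zenith):5.1f} atmospheres, "
              f"blue {direct_fraction(450, zenith):.3f}, red {direct_fraction(650, zenith):.3f}")
```

Under these assumptions almost no blue survives the direct trip at the horizon while a decent chunk of the red does, which is the whole sunset in two columns of numbers.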

So last quick question: why do clouds appear white? You can probably work this one out for yourself by applying what we've already learned, but since I had to look it up maybe it isn't as obvious as it seems in hindsight. Anyway, clouds are made of tiny droplets of liquid water (and ice crystals, if it's cold enough), not water vapor, which is invisible. Those droplets are way bigger than the gas molecules in the rest of the atmosphere, and also clumped together pretty closely if there are enough of them to make a visible cloud. Long story short, enough water droplets will scatter any visible photons hitting them more or less equally, which means that what gets to your eyes when you look at a cloud is roughly the full solar mix of everything, instead of mostly blue. Combine all frequencies of visible light in about the proportions the sun puts out and you get...white! Darker clouds look darker because they're taller, which means a lot of the light hitting them never gets to you at all; it just gets scattered out the top or sides where you'll never get to see it.

We could have come at this from a different direction: if my initial hypothesis (atmospheric absorption) had been correct, most or all of the substantial amount of energy in the light the sun is continuously dumping on the earth would get absorbed as heat by the atmosphere, meaning the place would get really goddamn hot (like melt-lead hot) really fast. To put it in some perspective, the estimated amount of "greenhouse gases" like carbon dioxide and methane (which DO absorb heat, mostly the infrared the earth radiates back out) that are in the atmosphere right now is something like a few hundredths of a percent of the total atmosphere, and that's already causing us some problems at the moment.

Thanks to Wikipedia and usually terrible sci-fi site io9 for this one!